Table of contents

Generally, there is always more than one way to solve a problem in computer science with different algorithms. Therefore, it is highly required to use a method to compare the solutions in order to judge which one is more optimal. The method must be:

Independent of the machine and its configuration, on which the algorithm is running on. 💻

Shows a direct correlation with the number of inputs. 📈

Can distinguish two algorithms clearly without ambiguity. ❓

There are two such methods used, time complexity and space complexity which are discussed below:

Time Complexity ⏰

The time complexity of an algorithm quantifies the amount of time taken by an algorithm to run as a function of the length of the input. Note that the time to run is a function of the length of the input and not the actual execution time of the machine on which the algorithm is running on.

Definition–

The valid algorithm takes a finite amount of time for execution. The time required by the algorithm to solve a given problem is called the time complexity of the algorithm. Time complexity is a very useful measure in algorithm analysis.

It is the time needed for the completion of an algorithm. To estimate the time complexity, we need to consider the cost of each fundamental instruction and the number of times the instruction is executed.

Example 1: Addition of two scalar variables.

Algorithm ADD SCALAR(A, B)

//Description: Perform arithmetic addition of two numbers

//Input: Two scalar variables A and B

//Output: variable C, which holds the addition of A and B

C <- A + B

return C

The addition of two scalar numbers requires one addition operation. The time complexity of this algorithm is constant, so T(n) = O(1).

In order to calculate time complexity of an algorithm, it is assumed that a constant time c is taken to execute one operation, and then the total operations for an input length of N are calculated. Consider an example to understand the process of calculation: Suppose a problem is to find whether a pair (X, Y) exists in an array, A, of N elements whose sum is Z. The simplest idea is to consider every pair and check if it satisfies the given condition or not.

The pseudo-code is as follows:

int a[n];

for(int i = 0;i < n;i++)

cin >> a[i]

for(int i = 0;i < n;i++)

for(int j = 0;j < n;j++)

if(i!=j && a[i]+a[j] == z)

return true

return false

Assuming that each of the operations in the computer takes approximately constant time, let it be c. The number of lines of code executed actually depends on the value of Z. During analysis of the algorithm, mostly the worst-case scenario is considered, i.e., when there is no pair of elements with a sum equal to Z. In the worst case:

N * c operations are required for input.

The outer loop (i loop) runs N times.

For each i, the inner loop (j loop) runs N times.

So the total execution time is N c + N N c + c. Now ignore the lower order terms since the lower order terms are relatively insignificant for large input, therefore only the highest order term is taken (without constant) which is N N in this case. Different notations are used to describe the limiting behavior of a function, but since the worst case is taken, so big-O notation will be used to represent the time complexity.

Hence, the time complexity is

O(N^2) for the above algorithm. Note that the time complexity is solely based on the number of elements in array A, i.e., the input length, so if the length of the array increases, the time of execution will also increase.

Order of growth is how the time of execution depends on the length of the input. In the above example, it is clearly evident that the time of execution quadratically depends on the length of the array. Order of growth will help to compute the running time with ease.

Another Example: Let’s calculate the time complexity of the below algorithm:

count = 0

for (int i = N; i > 0; i /= 2)

for (int j = 0; j < i; j++)

count++;

This is a tricky case. At first glance, it seems like the complexity is O(N * log N). N for the j's loop and log(N) for the i's loop. But it’s wrong. Let’s see why.

Think about how many times count++ will run.

When i = N, it will run N times.

When i = N / 2, it will run N / 2 times.

When i = N / 4, it will run N / 4 times.

And so on.

The total number of times count++ will run is N + N/2 + N/4 + … + 1 = 2 * N. So the time complexity will be O(N).

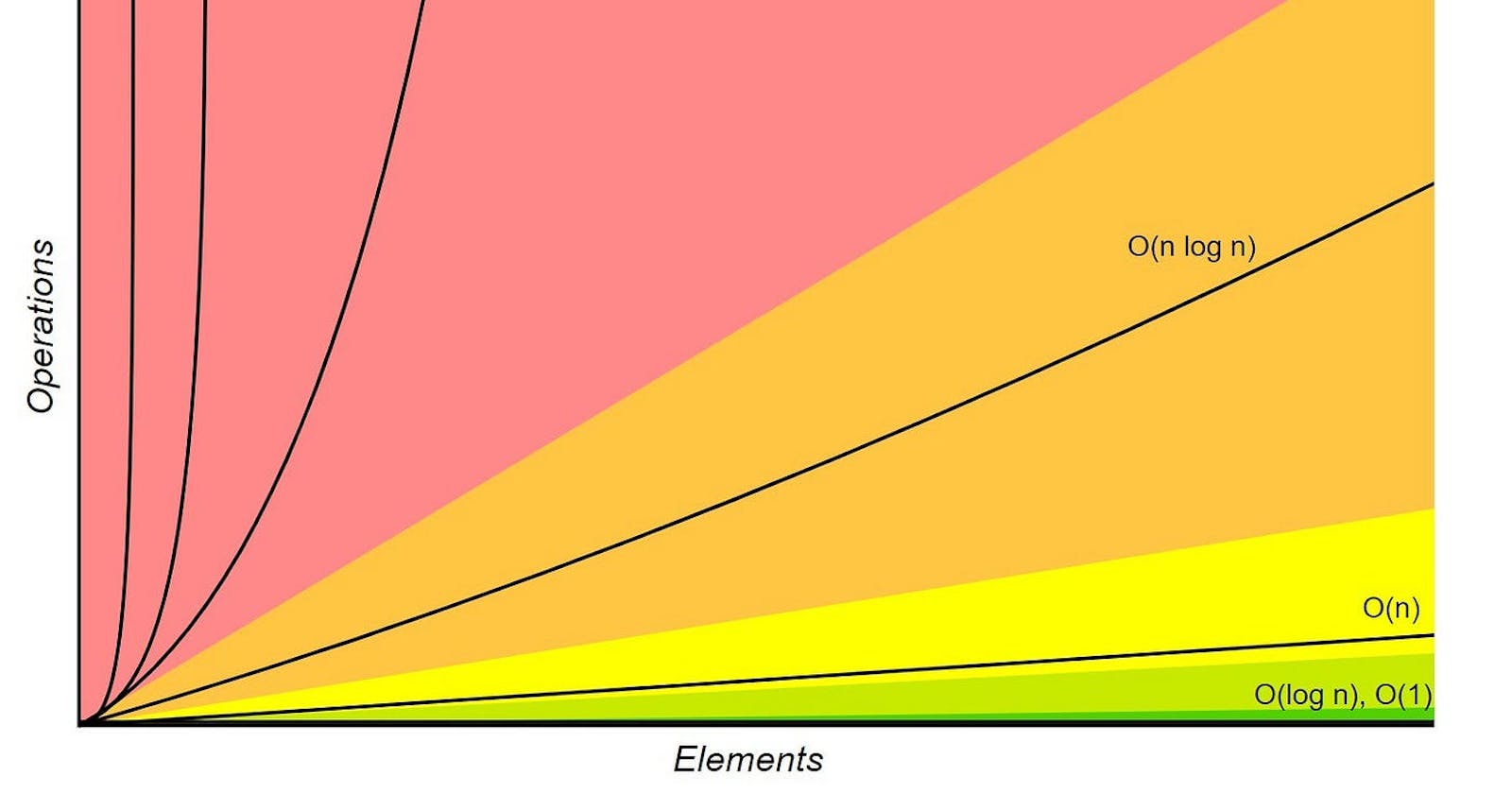

Some general time complexities are listed below with the input range for which they are accepted in competitive programming:

10^12: O(N!)

15-18: O(2^N * N)

18-22: O(2^N * N)

30-40: O(2^(N/2) * N)

100: O(N^4)

400: O(N^3)

2^K: O(N^2 * log N)

10K: O(N^2)

1M: O(N * log N)

100M: O(N), O(log N), O(1)

Space Complexity 📏

Definition –

Problem-solving using a computer requires memory to hold temporary data or final results while the program is in execution. The amount of memory required by the algorithm to solve a given problem is called the space complexity of the algorithm.

The space complexity of an algorithm quantifies the amount of space taken by an algorithm to run as a function of the length of the input. Consider the following example: Suppose a problem is to find the frequency of array elements.

It is the amount of memory needed for the completion of an algorithm.

To estimate the memory requirement, we need to focus on two parts:

(1) A fixed part: It is independent of the input size. It includes memory for instructions (code), constants, variables, etc.

(2) A variable part: It is dependent on the input size. It includes memory for the recursion stack, referenced variables, etc.

Example: Addition of two scalar variables

Algorithm ADD SCALAR(A, B)

//Description: Perform arithmetic addition of two numbers

//Input: Two scalar variables A and B

//Output: variable C, which holds the addition of A and B

C <- A + B

return C

The addition of two scalar numbers requires one extra memory location to hold the result. Thus the space complexity of this algorithm is constant, hence S(n) = O(1).

The pseudo-code is as follows:

int freq[n];

int a[n];

for(int i = 0;

i < n; i++)

{

cin >> a[i];

freq[a[i]]++;

}

Here, two arrays of length N and variable i are used in the algorithm, so the total space used is N c + N c + 1 c = 2N c + c, where c is a unit space taken. For many inputs, constant c is insignificant, and it can be said that the space complexity is O(N).

There is also auxiliary space, which is different from space complexity. The main difference is that while space complexity quantifies the total space used by the algorithm, auxiliary space quantifies the extra space that is used in the algorithm apart from the given input. In the above example, the auxiliary space is the space used by the freq[] array because that is not part of the given input. So the total auxiliary space is N * c + c, which is O(N) only.

Understanding time and space complexity is crucial in algorithm analysis as it allows us to evaluate the efficiency of different algorithms and choose the most optimal solution for a given problem. By considering these complexities, we can determine the scalability and performance characteristics of algorithms, enabling us to make informed decisions when designing and implementing software solutions.

That's all about time and space complexity! ⏰📏 I hope this article has provided you with a clear understanding of these concepts in algorithm analysis. Feel free to leave your comments and questions below. Happy coding! 💻😊